MCP Problems and How Anthropic Skills Aim to Solve Them (2026)

The rise of powerful Large Language Models (LLMs) has transformed AI from simple Q&A systems into intelligent agents capable of executing complex, multi-step workflows—such as booking flights, resolving customer issues, or orchestrating business processes. At the core of this shift is an agent’s ability to interact with real-world systems through tools and APIs.

The Model Context Protocol (MCP) emerged as an early standard to simplify and unify these interactions. However, as MCP adoption has accelerated, several fundamental limitations around scalability, security, and reliability have surfaced. This article unpacks what MCP is, where it breaks down in real-world agent systems, and how Anthropic’s emerging Skills paradigm aims to address these gaps, pointing toward a more robust and production-ready future for AI agents.

What is the Model Context Protocol (MCP)?

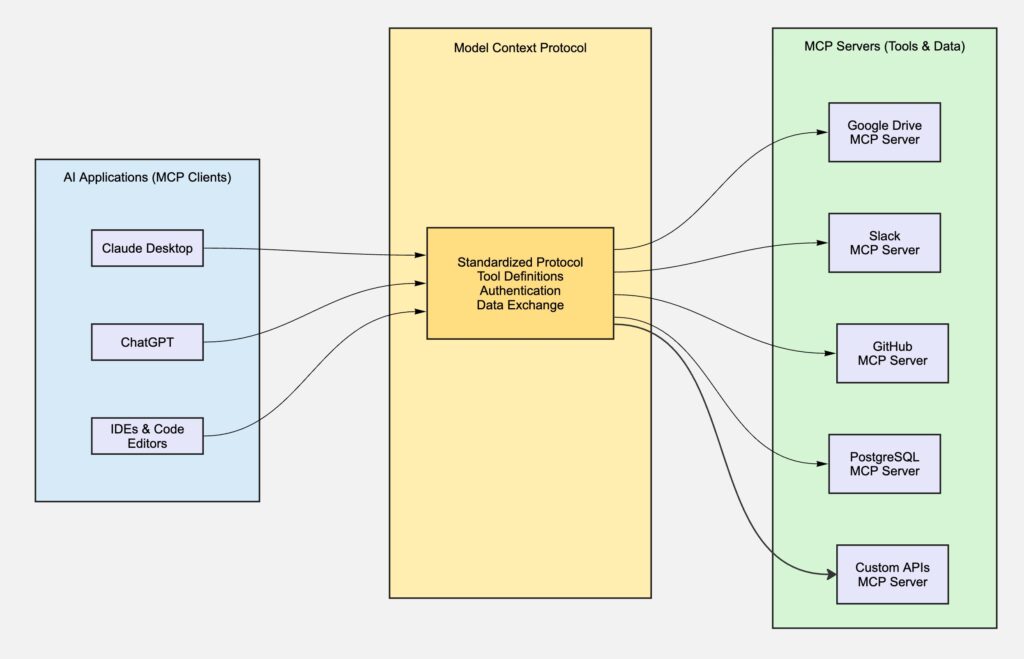

The Model Context Protocol (MCP) is an open-source standard designed to connect AI applications with external systems, such as data sources, tools, and workflows. Think of it as a universal translator, or as Anthropic puts it, a “USB-C port for AI applications”. Just as USB-C provides a standardized way to connect various electronic devices, MCP offers a unified protocol for AI agents to interact with a diverse ecosystem of external resources. This eliminates the need for custom integrations for each new tool or data source, significantly reducing development time and complexity.

At its core, the MCP architecture consists of two main components: MCP Servers, which expose data and tools, and MCP Clients, which live inside the AI application (the host) and connect to these servers. This simple yet powerful architecture has led to the creation of thousands of MCP servers for popular services like Google Drive, Slack, GitHub, and PostgreSQL, enabling AI agents to perform a wide range of tasks, from accessing your calendar to generating entire web applications from a Figma design.
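To make the client/server split a little more concrete, here is a rough sketch of the messages involved. MCP messages are JSON-RPC 2.0, and a server advertises each tool with a name, a description, and a JSON Schema for its input. The `get_weather` tool and the helper function names below are made up for illustration, and the sketch builds plain dictionaries rather than using any MCP SDK.

```python
import json

# Hypothetical tool definition in the shape an MCP server advertises:
# a name, a description, and a JSON Schema for the arguments.
weather_tool = {
    "name": "get_weather",
    "description": "Return the current weather for a city.",
    "inputSchema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def list_tools_response(request_id, tools):
    """Server side: the result sent back for a tools/list request."""
    return {"jsonrpc": "2.0", "id": request_id, "result": {"tools": tools}}


def call_tool_request(request_id, name, arguments):
    """Client side: ask the server to run one tool with given arguments."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


listing = list_tools_response(1, [weather_tool])
call = call_tool_request(2, "get_weather", {"city": "Lisbon"})
print(json.dumps(call, indent=2))
```

The point to notice is that the tool listing is what ends up in front of the model, which matters for the scaling problems discussed later.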

The Paradigm Shift: Traditional Software vs. AI Agents

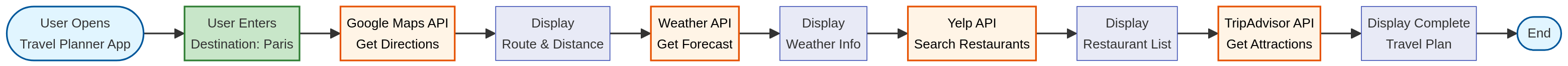

To understand the significance of MCP, it’s crucial to grasp the fundamental difference between traditional software and AI agents. Traditional software, like a travel planner app, operates on a predefined, deterministic workflow. When you input a destination, the application follows a hardcoded path, sequentially calling external APIs like Google Maps, a weather service, and Yelp to gather information. Every step is planned and coded in advance by human engineers.

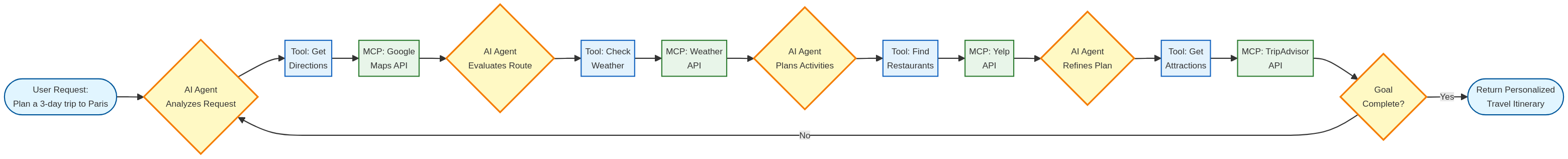

In contrast, AI agents are goal-driven and autonomous. You provide the agent with a high-level objective, and it dynamically plans and executes the necessary steps to achieve it. This involves interpreting the user’s request, selecting the appropriate tools, and processing the results in a continuous loop. The agent’s path is not predetermined and can vary based on the context and available resources. This is where MCP comes in, providing the standardized interface for the agent to discover and utilize the external tools it needs.
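The loop described above can be sketched in a few lines. This is only an illustration: `choose_next_step` is a stand-in for the LLM's decision, and the two tools are hypothetical stubs rather than real API calls.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical tools; real ones would call external systems.
TOOLS: dict[str, Callable[[str], str]] = {
    "search_flights": lambda query: f"3 flights found for {query}",
    "check_weather": lambda query: f"Sunny in {query}",
}


def choose_next_step(goal: str, history: list[tuple[str, str]]) -> str | None:
    """Stand-in for the model: pick the next unused tool, or None when done."""
    used = {name for name, _ in history}
    for name in TOOLS:
        if name not in used:
            return name
    return None


def run_agent(goal: str, max_steps: int = 5) -> list[tuple[str, str]]:
    """Plan, act, observe, repeat until the 'model' stops or steps run out."""
    history: list[tuple[str, str]] = []
    for _ in range(max_steps):
        tool_name = choose_next_step(goal, history)
        if tool_name is None:  # the stand-in model decided the goal is met
            break
        result = TOOLS[tool_name](goal)  # act, then record the observation
        history.append((tool_name, result))
    return history


steps = run_agent("Lisbon")
for name, observation in steps:
    print(name, "->", observation)
```

Unlike the hardcoded travel planner, nothing in `run_agent` fixes which tool runs next; that choice is made inside the loop on every iteration.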

The Growing Pains of MCP: A Victim of Its Own Success

While MCP has been instrumental in the rapid advancement of AI agents, its widespread adoption has brought a number of significant challenges to the forefront. These problems, if left unaddressed, threaten to stifle the very innovation that MCP was designed to foster.

1. Tool Definitions Don’t Scale with Context

MCP clients typically inject all tool definitions into the model’s prompt upfront. This approach works for a handful of tools but collapses at scale.

As tool count grows:

- Token usage explodes, increasing cost and latency

- The model suffers from “lost-in-the-middle” failures

- Valuable context is consumed before the agent even starts reasoning

In production, context is a scarce resource. MCP spends it too early.
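A back-of-the-envelope estimate shows how quickly this adds up. The sketch below generates synthetic tool definitions and counts tokens with a crude "about 4 characters per token" heuristic; both the heuristic and the shape of `make_tool` are assumptions, so treat the numbers as orders of magnitude only.

```python
import json


def estimate_tokens(text: str) -> int:
    """Very rough heuristic (an assumption): about 4 characters per token."""
    return len(text) // 4


def make_tool(index: int) -> dict:
    """Build a synthetic tool definition of a fairly typical size."""
    return {
        "name": f"tool_{index}",
        "description": "Looks up a record and returns its main fields.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "record_id": {"type": "string", "description": "Record ID"},
                "include_history": {"type": "boolean"},
            },
            "required": ["record_id"],
        },
    }


def upfront_cost(num_tools: int) -> int:
    """Estimated tokens spent before the agent has read a single user message."""
    return sum(estimate_tokens(json.dumps(make_tool(i))) for i in range(num_tools))


for count in (5, 50, 500):
    print(count, "tools ->", upfront_cost(count), "tokens (estimated)")
```

The cost grows linearly with the number of tools, and it is paid on every request whether or not any of those tools is used.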

2. Authentication Wasn’t Designed for Multi-User Reality

No Native User Isolation

Most MCP setups rely on a single shared token. There is no first-class concept of user identity, making it unsafe in shared or hosted environments.

No Session or State Awareness

The agent doesn’t inherently know who it is acting on behalf of, and it keeps no session state by default. Unless developers add custom plumbing, tools can be invoked with the wrong user context.
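The "custom plumbing" usually amounts to carrying an explicit user context into every tool call. Here is a minimal sketch of that idea; `UserContext`, the in-memory `TOKENS` store, and the `list_files` tool are all hypothetical, and a real system would use a proper secrets manager.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserContext:
    user_id: str
    access_token: str


# Hypothetical per-user credential store (use a secrets manager in practice).
TOKENS = {"alice": "token-alice", "bob": "token-bob"}


def context_for(user_id: str) -> UserContext:
    """Resolve the caller's own credentials, never a shared token."""
    if user_id not in TOKENS:
        raise PermissionError(f"no credentials for user {user_id!r}")
    return UserContext(user_id, TOKENS[user_id])


def list_files(ctx: UserContext) -> dict:
    """Hypothetical tool: the identity travels with every call."""
    return {"user": ctx.user_id, "auth_header": f"Bearer {ctx.access_token}"}


request = list_files(context_for("alice"))
print(request["user"])
```

Because the tool cannot be called without a `UserContext`, there is no code path where it silently falls back to someone else's token.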

OAuth Breaks the Agent Loop

Enterprise-grade tools require OAuth, but OAuth was designed for browsers—not autonomous agents. Redirects, localhost constraints, and manual authorization flows force agents out of their execution loop.

No Built-In Failure Strategy

When authentication fails or tokens expire, MCP offers no default recovery pattern. Every team ends up re-implementing retries, fallbacks, and re-auth logic.
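The kind of recovery logic teams end up writing looks roughly like this. It is a sketch of one possible pattern (refresh once, then retry), not something MCP prescribes; `AuthExpired`, `call_with_reauth`, and the fake tool are invented for the example.

```python
class AuthExpired(Exception):
    """Raised by a tool when its token is rejected (hypothetical)."""


def call_with_reauth(tool, get_token, refresh_token, max_attempts=2):
    """Call the tool; on an auth failure, refresh the token and try again."""
    token = get_token()
    for attempt in range(max_attempts):
        try:
            return tool(token)
        except AuthExpired:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the failure to the caller
            token = refresh_token()


# Tiny demo with an in-memory token that starts out stale.
state = {"token": "stale"}


def get_token():
    return state["token"]


def refresh_token():
    state["token"] = "fresh"
    return state["token"]


def fake_tool(token):
    if token != "fresh":
        raise AuthExpired("token expired")
    return "ok"


result = call_with_reauth(fake_tool, get_token, refresh_token)
print(result)
```

A production version would also need backoff, logging, and a way to hand control back to the user when a refresh is not possible.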

At scale, auth is not an edge case—it’s the system.

3. “Always-On” Servers Don’t Match Agent Usage Patterns

Each MCP server typically runs as a continuously active service, regardless of demand.

This leads to:

- Wasted compute and memory

- Higher operational costs

- Complex lifecycle management as tool count increases

Agent workloads are bursty and contextual. MCP servers are not.

4. Large Tool Outputs Quietly Destroy Reliability

Tool responses are injected directly into the LLM context, unfiltered.

When tools return large payloads:

- Context windows are overwhelmed

- Reasoning quality degrades

- Hallucinations become more likely

We’ve seen entire documents—tens of thousands of tokens—dumped into prompts repeatedly. This is not sustainable for production agents.
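One common mitigation is to clip tool output before it reaches the model. The sketch below is deliberately simple: `clip_tool_output` and the 2,000-character limit are illustrative choices, and real systems often summarize or paginate instead of cutting text off.

```python
def clip_tool_output(text: str, max_chars: int = 2000) -> str:
    """Keep the start of a large payload and say how much was dropped."""
    if len(text) <= max_chars:
        return text
    omitted = len(text) - max_chars
    return text[:max_chars] + f"\n[truncated: {omitted} characters omitted]"


document = "lorem ipsum " * 5000  # a 60,000-character stand-in for a big payload
clipped = clip_tool_output(document)
print(len(document), "->", len(clipped))
```

Telling the model that content was omitted matters: it can then ask for a specific section instead of reasoning as if it had seen the whole document.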

5. Tooling Is Static in a Dynamic World

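Once a session starts, the MCP tool set is, in most setups, fixed. (The protocol does let a server notify clients that its tool list has changed, but that is driven by the server, not by what the agent needs at the moment.)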

You cannot:

- Load tools on demand

- Unload unused tools

- Adapt the tool set as the conversation evolves

This rigidity wastes context and limits architectural flexibility—both unacceptable at scale.
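For contrast, here is what an adjustable tool set could look like. `ToolRegistry` is a hypothetical structure, not part of MCP or any SDK; it only illustrates the load and unload operations listed above.

```python
class ToolRegistry:
    """Hypothetical registry whose active tool set can change mid-session."""

    def __init__(self, catalog):
        self._catalog = dict(catalog)  # everything that could be loaded
        self._active = {}              # what the model currently sees

    def load(self, name):
        self._active[name] = self._catalog[name]

    def unload(self, name):
        self._active.pop(name, None)

    def active_tools(self):
        return sorted(self._active)


registry = ToolRegistry({
    "search_flights": "Find flights between two cities",
    "book_hotel": "Reserve a hotel room",
    "send_invoice": "Email an invoice to a customer",
})
registry.load("search_flights")
registry.load("book_hotel")
registry.unload("search_flights")  # the conversation moved on from flights
print(registry.active_tools())
```

Only the active entries would be rendered into the prompt, so context is spent on the tools the conversation currently needs.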

6. Developer Cost Is Higher Than It Should Be

Building an MCP server means implementing a full API surface:

- Tool discovery endpoints

- Schemas and typing

- Input/output contracts

This is heavy engineering for lightweight use cases—and brittle when upstream APIs change. The integration tax is real.

7. Tool Discovery Is Friction-Heavy

Some tools require OAuth before exposing their capabilities.

Users are forced to grant access without understanding:

- What the tool does

- What data it can access

- What actions it can perform

That’s not good security, and it’s worse UX.

8. Consent and Permission Are Left to Chance

MCP does not enforce standards for:

- User consent prompts

- Permission granularity

- Scope limitations

Once connected, tools often expose everything by default. In enterprise environments, this is a governance red flag.
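A narrower default is not hard to express in code. The sketch below checks a required scope before any tool runs; the scope names, `TOOL_SCOPES`, and `invoke` are assumptions made for the example rather than anything defined by MCP.

```python
# Hypothetical mapping from tool name to the scope it requires.
TOOL_SCOPES = {
    "read_calendar": "calendar:read",
    "delete_event": "calendar:write",
}


def invoke(tool_name: str, granted_scopes: set) -> str:
    """Refuse to run a tool unless the user granted the scope it needs."""
    required = TOOL_SCOPES[tool_name]
    if required not in granted_scopes:
        raise PermissionError(f"{tool_name} needs scope {required!r}")
    return f"{tool_name} executed"


granted = {"calendar:read"}  # the user consented to read access only
print(invoke("read_calendar", granted))
```

With this shape, connecting a calendar for reading does not quietly grant the agent the ability to delete events.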

Clarifying the Concepts: A Chef’s Kitchen Analogy

Before diving into how Anthropic’s Skills solve these problems, it’s helpful to clarify the differences between three related concepts: MCP, Sub-agents, and Skills. Imagine a bustling restaurant kitchen run by a master AI agent, the “Head Chef.”

- MCP is the Ingredient Delivery Service: Think of MCP as the restaurant’s delivery truck. It’s the protocol that goes out into the world to fetch all the raw ingredients (data and tools) from external suppliers (APIs like Google Maps, Yelp, etc.). It’s the essential link to the outside world, but it just delivers the raw materials; it doesn’t know how to cook them.

- Sub-agents are the Specialist Chefs: Sub-agents are like expert chefs in the kitchen—a dessert chef, a soup chef, a grill master. Each is a master of their specific domain. You can hand them a high-level task (“Make a signature dessert”), and they will use their deep expertise to create something amazing. However, they are distinct, often complex agents themselves, and the Head Chef must coordinate their work to create a cohesive final meal.

- Skills are the Recipe Cards and Techniques: Skills are the restaurant’s collection of recipe cards, signature plating techniques, and secret sauces. They are not chefs themselves, but rather the codified knowledge and instructions that any chef can use. When the Head Chef needs to make a specific dish, they don’t have to reinvent the wheel; they pull the relevant recipe card (the Skill) from the library. This card tells them exactly which ingredients to use and how to combine them, ensuring consistency, efficiency, and quality.

With this framework, it becomes clear that these are not mutually exclusive concepts but different layers of abstraction for building capable AI agents.

Anthropic’s New Concept: Skills

In response to these challenges, Anthropic has introduced a new concept called “Skills.” Skills are a more sophisticated and scalable way to equip AI agents with new capabilities, addressing many of the fundamental problems with MCP.

Progressive Disclosure: The Core Principle

The key innovation of Skills is the concept of progressive disclosure. Instead of loading all tool definitions upfront, a Skill only loads a tiny, ~100-token description at startup. The full details of the Skill are only loaded into the context window when the agent determines that it’s relevant to the current task. This simple yet powerful idea has a profound impact on token consumption, reducing the “always-on” cost by as much as 98%.
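The mechanics can be sketched with a small in-memory example. The two skills, their texts, and the `startup_context` and `activate` helpers are all invented here; the sketch only shows the shape of the idea, not how Anthropic implements it.

```python
# Hypothetical skills: a short description plus a much longer body.
SKILLS = {
    "pdf-report": {
        "description": "Build a PDF report from a CSV file.",
        "body": "Detailed step-by-step instructions for PDF reports. " * 200,
    },
    "sql-audit": {
        "description": "Review SQL queries for slow patterns.",
        "body": "Detailed step-by-step instructions for SQL audits. " * 200,
    },
}


def startup_context(skills):
    """Only names and short descriptions are loaded at startup."""
    return "\n".join(
        f"{name}: {info['description']}" for name, info in skills.items()
    )


def activate(skills, name):
    """The full body enters the context only when the skill is selected."""
    return skills[name]["body"]


index = startup_context(SKILLS)
everything = "".join(info["body"] for info in SKILLS.values())
loaded = activate(SKILLS, "sql-audit")
print(len(index), "characters at startup vs", len(everything), "if all were preloaded")
```

The always-on cost is the size of `index`; the larger bodies are paid for one at a time, and only when a task calls for them.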

The Anatomy of a Skill

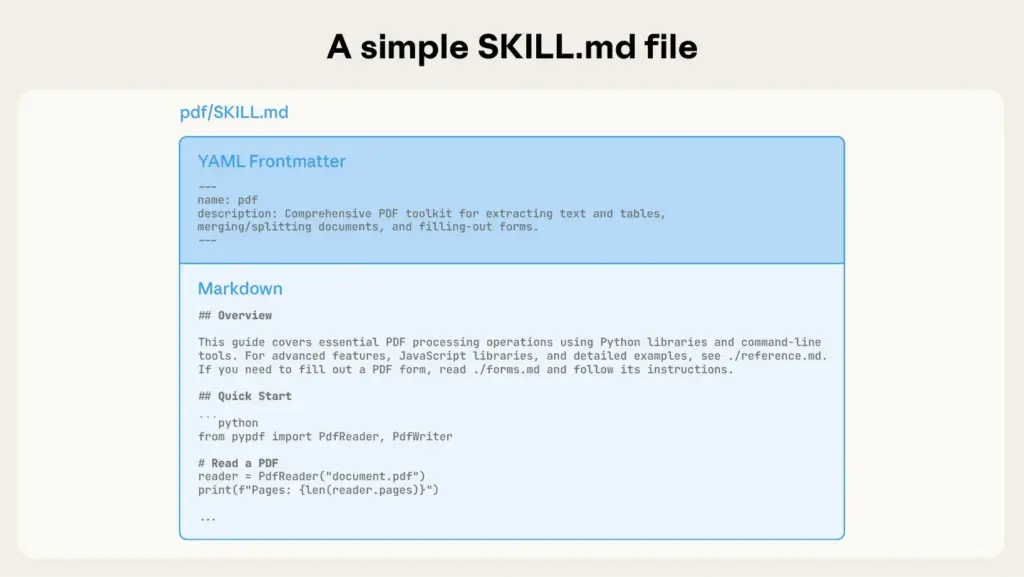

A Skill is essentially a well-organized folder containing a SKILL.md file, which includes the skill’s description and instructions, as well as optional folders for references and scripts. This modular structure allows for the creation of complex, multi-faceted skills that can be easily managed and updated.
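As a rough illustration of that layout, the sketch below creates a skill folder in a temporary directory and reads back only the header of its SKILL.md. The `pdf-report` skill, its file contents, and the tiny `read_frontmatter` parser (which handles simple `key: value` lines, not full YAML) are assumptions made for the example.

```python
import tempfile
from pathlib import Path

SKILL_MD = """---
name: pdf-report
description: Build a PDF summary report from a CSV file.
---
# Instructions
1. Run scripts/build_report.py with the CSV path.
2. See references/style.md for formatting rules.
"""


def create_skill(root: Path) -> Path:
    """Write a hypothetical skill folder: SKILL.md plus optional subfolders."""
    skill_dir = root / "pdf-report"
    (skill_dir / "scripts").mkdir(parents=True)
    (skill_dir / "references").mkdir()
    (skill_dir / "SKILL.md").write_text(SKILL_MD, encoding="utf-8")
    (skill_dir / "scripts" / "build_report.py").write_text(
        "print('report built')\n", encoding="utf-8"
    )
    (skill_dir / "references" / "style.md").write_text(
        "Use A4 pages.\n", encoding="utf-8"
    )
    return skill_dir


def read_frontmatter(skill_md: Path) -> dict:
    """Parse simple 'key: value' lines between the two '---' markers."""
    lines = skill_md.read_text(encoding="utf-8").splitlines()
    meta = {}
    if not lines or lines[0].strip() != "---":
        return meta
    for line in lines[1:]:
        if line.strip() == "---":
            break
        key, _, value = line.partition(":")
        meta[key.strip()] = value.strip()
    return meta


with tempfile.TemporaryDirectory() as tmp:
    skill_dir = create_skill(Path(tmp))
    meta = read_frontmatter(skill_dir / "SKILL.md")
    layout = sorted(p.relative_to(skill_dir).as_posix() for p in skill_dir.rglob("*"))

print(meta["name"], layout)
```

The header is the part an agent would keep in view at all times; the instructions, references, and scripts stay on disk until they are needed.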

Dynamic Tool Discovery

A related innovation (complementary to Skills) is tool search. Anthropic introduced a “tool search tool” that lets the model search the available tools by name or capability when it needs something, rather than guessing from a huge list. In effect, the agent queries an index of tools to find which skill or MCP server might have what it needs. This removes the need to preload all tool definitions, yet the agent can still find any tool on demand (lazy-loading the “manual” for that tool).
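A toy version of the idea fits in a few lines. The scoring here is plain keyword overlap, chosen only to keep the sketch short; it is a stand-in, not the matching method Anthropic's tool uses, and the index entries are made up.

```python
# Hypothetical index: tool name -> one-line capability description.
TOOL_INDEX = {
    "slack_send_message": "Send a message to a Slack channel",
    "github_create_issue": "Create an issue in a GitHub repository",
    "drive_search_files": "Search files in Google Drive by name or content",
}


def search_tools(query, limit=3):
    """Rank tools by how many query words appear in their name or description."""
    words = set(query.lower().split())
    scored = []
    for name, description in TOOL_INDEX.items():
        haystack = set((name.replace("_", " ") + " " + description).lower().split())
        score = len(words & haystack)
        if score:
            scored.append((score, name))
    scored.sort(key=lambda item: (-item[0], item[1]))  # best score first
    return [name for _, name in scored[:limit]]


matches = search_tools("create github issue")
print(matches)
```

Only the definitions of the matching tools would then be loaded into the context, instead of the whole index.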

On-demand Code Execution

This is the biggest paradigm shift behind Skills. Instead of the LLM calling external APIs and parsing massive JSON responses (as in MCP), Skills let the agent run actual code (e.g. Python or JS) that does the heavy lifting — fetching, processing, and summarizing data — before returning a compact result to the model.

This slashes token usage (Anthropic reported up to 98.7% savings), avoids flooding the context window, and keeps the model focused on just what matters. Because the code handles the task, you can truncate or format outputs before they even reach the model, solving the “response bloat” problem at the source.
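Here is a small sketch of the pattern: the code works through a large payload and hands the model only a summary. The order data is synthetic and `fetch_orders` stands in for a real API call, so the size comparison is illustrative and is not a reproduction of Anthropic's reported figure.

```python
import json
import statistics


def fetch_orders():
    """Stand-in for a large API response (hypothetical data)."""
    return [
        {"id": i, "amount": 10 + (i % 7), "status": "paid" if i % 5 else "refunded"}
        for i in range(10_000)
    ]


def summarize_orders(orders):
    """Reduce thousands of records to the few numbers the model needs."""
    paid = [order["amount"] for order in orders if order["status"] == "paid"]
    return {
        "orders": len(orders),
        "paid": len(paid),
        "refunded": len(orders) - len(paid),
        "mean_paid_amount": round(statistics.mean(paid), 2),
    }


orders = fetch_orders()
summary = summarize_orders(orders)
raw_chars = len(json.dumps(orders))
summary_chars = len(json.dumps(summary))
print(summary)
print(raw_chars, "characters of raw JSON vs", summary_chars, "returned to the model")
```

The raw records never enter the context window; only the dictionary returned by `summarize_orders` does.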

Lightweight Infra, Zero Idle Cost

Skills don’t require always-on MCP servers. Each skill is just a folder of instructions and code that lives alongside the agent. It runs only when invoked — no microservices, no polling, no cloud bills for idle processes. Cloudflare’s implementation used V8 isolates to execute tools securely and ephemerally — fast, cheap, and stateless.

Better Security & Auth

With Skills, secrets stay in code — not in the model’s prompt. API keys and tokens can be pulled from secure storage and used internally, never exposed to the LLM. You can build in permission checks, role enforcement, and data redaction directly in the tool logic. No more leaking credentials through hallucinated JSON.
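In code, that separation might look like the sketch below. The `BILLING_API_KEY` variable, the `call_billing_api` function, and the customer record are all hypothetical; the point is only that the key is read inside the function and that sensitive fields are redacted before anything is returned to the model.

```python
import os

HIDDEN_FIELDS = {"email"}  # fields the model should never see (an assumption)


def redact(record: dict) -> dict:
    """Replace sensitive values before the result leaves the tool."""
    return {
        key: ("[redacted]" if key in HIDDEN_FIELDS else value)
        for key, value in record.items()
    }


def call_billing_api(customer_id: str) -> dict:
    """Hypothetical tool: the secret is read here, not placed in the prompt."""
    api_key = os.environ.get("BILLING_API_KEY")
    if not api_key:
        raise RuntimeError("BILLING_API_KEY is not set")
    # A real implementation would send api_key in a request header here.
    record = {"customer_id": customer_id, "email": "jane@example.com", "balance": 42.5}
    return redact(record)


os.environ.setdefault("BILLING_API_KEY", "demo-key")  # demo value only
result = call_billing_api("c-123")
print(result)
```

Neither the key nor the email address appears in `result`, so neither can end up in the model's context or its output.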

A New Era of AI Agency

Anthropic’s Skills concept represents a significant step forward in the evolution of AI agents. By addressing the fundamental limitations of MCP, Skills pave the way for a new generation of more powerful, scalable, and secure AI applications (hopefully without making them more complicated to build!). As the AI landscape continues to evolve, it is clear that the future of AI agency lies not just in the power of the models themselves, but in the sophistication of the tools and frameworks that connect them to the world.
